Google’s Core Updates Explained: What They Are & How to Prepare for Them

SEO is a bit of a strange concept to people not working within the industry, and it’s easy to get frustrated with an SEO expert when “I don’t know” is their response to “Why are my rankings dropping?”

KPIs, like keyword rankings, are undoubtedly important for measuring campaign success, and getting upset by a sudden drop in rankings is certainly a valid response, but remember, your SEO specialist needs time to figure out possible causes. When you hear “I don’t know,” the underlying message is “I don’t know, but I will find out.”

I discuss ranking drops a little bit in this blog, but I want to highlight again that one of the most common causes of sudden drops is Google testing various keywords or releasing a planned algorithm update.

Google frequently pushes several types of updates each year: core, spam, reviews, and helpful content.

Core Updates Versus Other Algorithm Updates

Here’s a basic breakdown of each type of algorithm change:

Core

A core update is the big boss of Google algorithm updates. It’s the one that makes SEO experts quiver in their boots when they know one is on the way or in progress. Core updates are the most mysterious to SEO experts and often cause the most volatility. Nobody really knows the purpose of each specific core update because Google never explicitly announces what will be impacted. In general terms, a core update modifies the way Google’s algorithm interprets queries and decides what type of content to show for a search. It’s up to SEO experts to analyze search engine results pages (SERPs) before, during, and after these updates to determine what could have been impacted.

The latest core update finished rolling out on September 3, 2024. It’s too early to determine what was affected. However, based on the last core update, it can be assumed that content is still a priority.

How Often They’re Done: 2-3 times per year (2023 had a record of four core updates)

Spam

Spam updates help reduce search spam, which can accidentally inflate KPIs. If you frequently look at your Google Search Console, you may notice spikes in clicks or impressions one day. Many times, this is caused by spam searches.

The most recent Google spam update began rolling out on June 20, 2024, and was completed on June 27, 2024.

How Often They’re Done: 2-3 times per year

Reviews

This is specifically related to consumer reviews. Each time this update occurs, it’s essentially Google trying to ensure high-quality reviews showing first-hand experience are emphasized rather than potentially fake or unhelpful reviews.

So, if a new skincare product launches, Google’s goal might be getting dermatologist reviews to appear first, rather than from some random girl named Becky who lives in Utah and just wants to try something new. A dermatologist is a skincare expert who can provide some insight as to who can use the product and who should stay away from it, whereas Becky is just…Becky (sorry).

The last reviews update was completed on December 7, 2023.

How Often They’re Done: 2-3 times per year

Helpful Content

I’m tired of finding the same copy-and-paste articles on different websites. Turns out, Google is, too. So, in 2022, they started pushing helpful content updates, which aim to improve search engine results pages (SERPs) by showing readers only reliable and helpful content. Of course, Google still isn’t perfect and populates questionable content, and likely will for quite some time, but they’re working on it.

The last official helpful content update was completed on September 27, 2023. As of 2024, helpful content updates are no longer standalone and are instead incorporated into core updates.

How Often They’re Done: 1-2 times per year

Predicting & Identifying Algorithm Updates

Predicting

- Look at Trends (Slightly Unreliable): Google has a history of algorithm updates on its website. You can view this list to analyze potential trends. For example, based on recent history, you could expect a core update earlier in the year (no later than March) and another one in late summer/early fall, in August or September. Of course, Google doesn’t have a set schedule (that we know of), which makes it hard to predict when a core update will happen. Last year, I thought Google would have another September core update, but they surprised me by releasing a core update in August and another one soon after in October and then November.

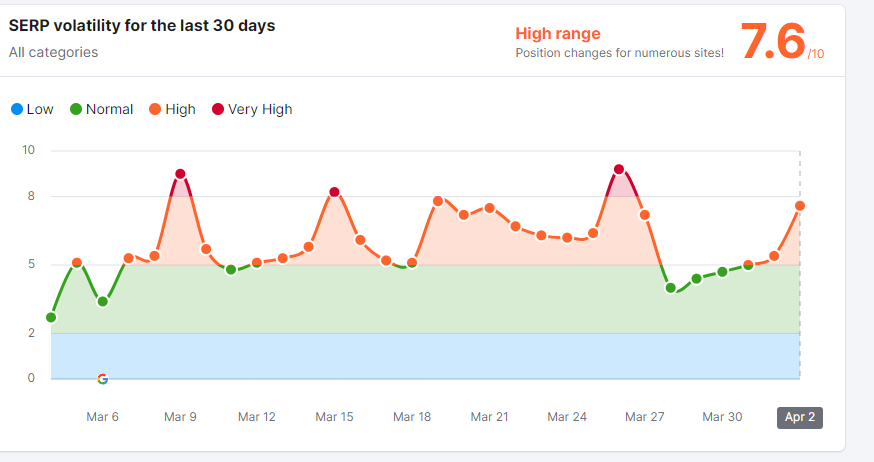

- Look at Volatility Trackers (More Reliable): Your SEO company most likely has a tool with a SERP volatility chart. I use SEMrush to look at SERP volatility within the last 30 days. For example, while the core update that began on March 5 was rolling out, SEMrush showed very high SERP volatility. High SERP volatility usually means Google is testing something. I call this method more reliable rather than fully reliable because sometimes high SERP volatility is just Google testing for a few days and does not indicate an algorithm update. Still, when no update is officially in progress, it’s often a good sign that one is on the way.
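
To make the volatility idea concrete, here is a minimal Python sketch that flags days where a volatility score jumps well above its recent baseline. The scores, the seven-day window, and the threshold are all made-up assumptions for illustration. SEMrush and similar tools calculate volatility in their own ways, and this is not their method.

```python
from statistics import mean, pstdev

# Hypothetical daily SERP volatility scores (oldest first), e.g. copied by
# hand from a volatility chart. These numbers are invented for illustration.
DAILY_SCORES = [3.0, 3.2, 2.9, 3.1, 3.0, 3.3, 2.8, 3.1, 8.5, 9.0, 3.0]


def flag_volatile_days(scores, window=7, z_threshold=2.0):
    """Return the indexes of days that sit far above the prior `window` days.

    A day is flagged when its score is at least `z_threshold` standard
    deviations above the average of the preceding window.
    """
    flagged = []
    for i in range(window, len(scores)):
        baseline = scores[i - window:i]
        spread = pstdev(baseline)
        if spread == 0:
            # A perfectly flat baseline gives no scale to compare against.
            continue
        z_score = (scores[i] - mean(baseline)) / spread
        if z_score >= z_threshold:
            flagged.append(i)
    return flagged


print(flag_volatile_days(DAILY_SCORES))  # prints [8, 9]
```

A one- or two-day spike like the one in this sample could just be Google testing, so treat a flagged day as a prompt to check for an official announcement rather than proof that an update is rolling out.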

Identifying

- Look at Google’s Status List: The easiest way to identify an algorithm update is to go to Google’s status updates list. This is the best way to know when an update has rolled out and the expected duration.

- Sign Up for Newsletters: If you sign up for SEO-related news or read a lot of SEO news, you will be promptly blasted with about 25 articles announcing that a new algorithm update has just rolled out. The downside is that the news articles are sometimes released several days after an update has rolled out or you receive the news alert a few days after the article was published.

The SEO Side Effects of Algorithm Updates

In my experience, algorithm updates can go one of two ways for clients:

- Client A: Experiences minor turbulence but otherwise emerges on the other side totally unscathed.

- Client B: Rankings immediately drop. Panic ensues.

Whether you’re Client A or Client B in this scenario, it pains me to say this: there is no way to prevent an algorithm update from impacting your site. No matter what anyone tells you, there is no “secret sauce.” It’s just the way it is.

Algorithm updates are most likely going to affect your keyword rankings, but you may also notice a drop in organic traffic, clicks, and impressions. Conversely, if Google thinks your website is extremely helpful to users, you may notice a growth in rankings, organic traffic, clicks, and impressions.

What Does Google Prioritize Now After the Latest Algorithm Update?

Content Accuracy

With the growth of AI-generated content and potential misinformation, Google will continue emphasizing content accuracy.

Google will most likely determine content accuracy based on the following factors:

- Publication Date: The date determines relevancy/timeliness. For example, if this blog post were just about Google’s history of updates but hadn’t been updated in a year, it would no longer be an accurate or relevant article.

- Author: Who is writing the content? Readers want to know, and Google does, too. While I don’t think adding a random author to a blog post will boost rankings immediately, I think it matters in establishing authority and expertise. If the content were written by an expert in the field or at least edited by someone with experience, Google would consider that content more trustworthy and accurate than a content piece with no clearly defined author. You can read this blog I wrote for more details on how authorship can aid in boosting website authority.

- References: Does the article also reference or link to high-value resources? This may indicate to Google that some research was done to ensure the content was accurate.

- Peer Editing: When you were in college, you were probably taught to only reference peer-reviewed resources. I can see this being a similar situation with Google, where content that has been written and evaluated by people within the industry is deemed more valuable than content that hasn’t.

- First-Person Experience: Expertise isn’t the only factor Google considers when determining content value and accuracy—Google may also emphasize first-person experience. So, if I’m searching for clues as to why my Chinese Perfume Plant suddenly has brown leaves, I may get some Quora or Reddit forums popping up from people asking similar questions. In forums, there may be people who have gone through similar experiences and can share tips.

Search Intent

In addition to content accuracy, I think Google will continue to analyze search intent to determine if the content meets user expectations. For example, some keywords, like ‘concrete stain’ or ‘hydraulic pump,’ will most likely have a high commercial intent, meaning that when people are searching for these terms, they’re looking to review and purchase products.

There could also be a mix of commercial and informational content in some situations. So, with concrete stains, maybe users want to buy concrete stains and also find resources that tell them how to apply the stain. Google is always looking for ways to improve the SERPs to meet user expectations, so if Google thinks people are looking for product pages most of the time, they will emphasize product pages in the SERPs. In other words: you will likely not rank for that keyword if you don’t have product pages.

On-Page Experience

Annoying pop-ups, slow loading, and questionable design choices (think black background with yellow text) are all effective methods of driving users away. Google has already said that on-page experience is a ranking factor and is measured with their Core Web Vitals metrics: CLS (Cumulative Layout Shift), INP (Interaction to Next Paint), and LCP (Largest Contentful Paint).

- CLS: Does the layout shift unexpectedly? CLS measures how much visible content moves around while a user is on the page, such as text jumping down when an image or ad loads late. Layout problems are often most noticeable when a user visits a website from their cell phone instead of their desktop computer, where the website can start doing some weird stuff like cutting off text. All websites should be responsive, or built to seamlessly adapt to different screen sizes.

- INP: This is a relatively new metric. It replaced FID, or First Input Delay, as a Core Web Vital in March 2024. INP measures overall responsiveness. If a user clicks on a link, button, or video playback control, the page should respond without a noticeable delay. If there are significant delays, your on-page experience is poor.

- LCP: This refers to how quickly the largest visible element on your web page or blog, whether that’s a video, a block of text, or an image, loads. The faster, the better.

So, I think Google will continue to prioritize this by ensuring that pages with poor user experience don’t rank high.
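
Google publishes “good” and “poor” thresholds for each Core Web Vital, so a quick way to triage measurements is to sort them into buckets. The sketch below assumes you already have the metric values from a report or field data. The function name and the sample numbers are hypothetical.

```python
# Google's published Core Web Vitals thresholds: (good up to, poor above).
# LCP is in seconds, INP is in milliseconds, and CLS is a unitless score.
THRESHOLDS = {
    "LCP": (2.5, 4.0),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}


def rate_vital(metric, value):
    """Bucket one measurement as 'good', 'needs improvement', or 'poor'."""
    good_limit, poor_limit = THRESHOLDS[metric]
    if value <= good_limit:
        return "good"
    if value <= poor_limit:
        return "needs improvement"
    return "poor"


# Made-up measurements for a single page.
sample_page = {"LCP": 2.1, "INP": 350, "CLS": 0.3}
for metric, value in sample_page.items():
    print(f"{metric}: {value} -> {rate_vital(metric, value)}")
```

For this sample page, LCP is good, INP needs improvement, and CLS is poor, which tells you layout stability is the first thing to fix.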

Google Priority Predictions:

- Content accuracy

- Search intent

- Pages with good user experience

Can You Prepare for an Update?

Yes, you absolutely can. The two most effective ways to prepare are to perform monthly technical audits and annual or twice-yearly content audits of your website.

Technical Audits

When reviewing your website, look for the following:

- Indexing: Have you recently added new web pages or blogs to your website? Has Google indexed them? You can check this on Google Search Console. If they’re indexed, they’re searchable/findable on Google. If they’re not, they can only be viewed if a user visits your website. Also, check for content that is labeled Noindex. Was it done by accident?

- Page Speed Issues: Page speed is always going to matter to users. If your website is slow, it needs to be corrected as soon as possible.

- Sitemap: If you’ve added a significant amount of content to your website, your sitemap needs to be updated and resubmitted to Google so they understand your website’s architecture/general structure.

- Robots.txt: Does Google (or any other search engine) have access to your robots.txt file? This file links to your sitemap and tells search engine crawlers which pages they can access. If your robots.txt file doesn’t work, it could cause significant indexing issues.

- Website Errors/404s: Every website will have some 404s, and Google has mentioned that that’s okay, but it becomes a problem when high-traffic or top-performing content gets taken down with no redirects or if content pieces on your website link to resources that no longer exist. When setting up redirects, find a resource that most accurately replaces the one that no longer exists. Don’t redirect to commercial/sales content if the original content was informational. That’s not helpful for the user. Don’t redirect to the homepage either, as that’s also not helpful to the user. Don’t set up a redirect if you don’t have a resource that effectively replaces the one that doesn’t exist. Instead, remove any internal links within the website that take users to the non-existing resource.
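
The 404 guidance above boils down to two steps: find the internal links that point at dead pages, then decide whether each dead page gets a redirect or has its links removed. Here is a rough Python sketch of that logic. It assumes you have already exported crawl data from whatever site crawler you use, and every URL, name, and data structure in it is hypothetical.

```python
def find_broken_internal_links(page_links, status_codes):
    """Map each page to the internal links on it that return a 404.

    page_links:   {page URL: [internal URLs that page links to]}
    status_codes: {URL: HTTP status code seen during the crawl}
    """
    broken = {}
    for page, links in page_links.items():
        dead = [link for link in links if status_codes.get(link) == 404]
        if dead:
            broken[page] = dead
    return broken


def plan_fixes(dead_urls, replacements):
    """Redirect only when a true replacement exists; otherwise remove links.

    replacements: {dead URL: closest matching live URL}, chosen by a person.
    """
    plan = {}
    for url in dead_urls:
        target = replacements.get(url)
        if target:
            plan[url] = ("redirect", target)
        else:
            plan[url] = ("remove_internal_links", None)
    return plan


# Made-up crawl data for illustration.
page_links = {
    "/blog/lawn-care-tips": ["/blog/old-guide", "/products/fertilizer"],
    "/blog/spring-checklist": ["/blog/retired-promo"],
}
status_codes = {
    "/blog/old-guide": 404,
    "/products/fertilizer": 200,
    "/blog/retired-promo": 404,
}
replacements = {"/blog/old-guide": "/blog/new-guide"}

broken = find_broken_internal_links(page_links, status_codes)
dead_urls = sorted({url for links in broken.values() for url in links})
print(plan_fixes(dead_urls, replacements))
```

The replacement map is filled in by hand on purpose, since picking the resource that most accurately replaces a removed page is a judgment call, not something to automate.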

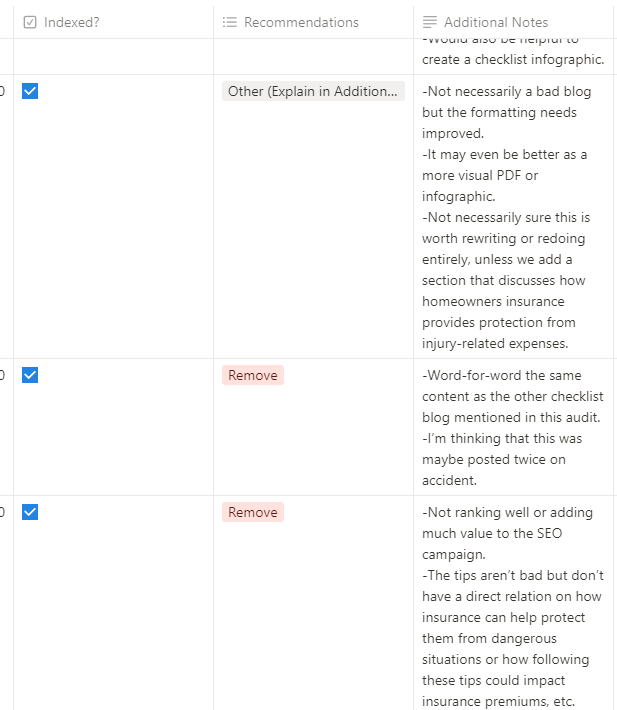
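
For the robots.txt check in the list above, Python’s standard library includes a robots.txt parser, which makes it easy to confirm that important pages aren’t blocked by accident and that the file points to a sitemap. This is a sketch under assumptions: the robots.txt content, the example.com URLs, and the `audit_robots` helper are all invented for illustration, and real crawlers may interpret edge cases differently than this parser does.

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt that accidentally blocks the whole blog.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /blog/

Sitemap: https://www.example.com/sitemap.xml
"""


def audit_robots(robots_txt, important_urls, user_agent="Googlebot"):
    """Return (blocked important URLs, sitemap URLs listed in the file)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = [
        url for url in important_urls
        if not parser.can_fetch(user_agent, url)
    ]
    # site_maps() returns None when no Sitemap line is present.
    sitemaps = parser.site_maps() or []
    return blocked, sitemaps


important_urls = [
    "https://www.example.com/",
    "https://www.example.com/blog/core-updates",
    "https://www.example.com/products/fertilizer",
]
blocked, sitemaps = audit_robots(ROBOTS_TXT, important_urls)
print("Blocked:", blocked)
print("Sitemaps:", sitemaps)
```

In this sample, the blog post shows up as blocked, which is exactly the kind of accidental rule a monthly technical audit should catch.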

Content Audits

The frequency of your content audits will depend on how often you publish content. A good rule of thumb is to perform an audit every six months. When I perform content audits, I look for the following information:

- When was the content written? Could it be updated? Is the information no longer relevant?

- Is the content ranking for any keywords?

- Does the content match the overall search intent?

- Does the content accurately answer any questions it poses?

- Does the content get straight to the point, or is there a lot of fluff that could put off readers? You can still have fun with content, but you need to answer their questions as soon as possible. If you pose a question like “Can you recover from an algorithm update?” say yes, no, or maybe first, then provide an explanation, not the other way around.

- Does the overall structure of the content make sense?

- Can readability be improved in any way?

- Are people engaging with the content piece? It’s great if it’s getting a ton of traffic, but what’s the point if people drop out after 10 seconds?

- Does the content have any backlinks? Repeat this after me: Do not purchase backlinks. Backlinks aren’t the answer. It’s cool to have them, but you must also assess the quality. A bunch of low-quality backlinks aren’t going to improve the trustworthiness of that particular content piece or your website as a whole.

- Is the content relevant to the business? Don’t try to rank for keywords irrelevant to your business and the products or services you offer. I know that probably seems common sense, but…well, let’s leave it at that.

- Could the content be repurposed in any way? Maybe it would look better as an infographic or image. This part is subjective and based on the person doing the auditing.

- Is the content linked anywhere, or is it an orphan page? Orphan pages can still be indexed and found online, but users won’t be able to reach them by navigating your website. All content pieces should be internally linked on your website somewhere in a way that makes sense for the reader.

- Is the content indexed? If it isn’t, why?

- Is there duplicate content? Are there other resources on the website that accomplish the same goal? Can similar content pieces be consolidated?
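
The orphan page check from the list above is easy to automate once you have two things: a list of every page on the site (for example, from your sitemap) and a record of which pages link to which. The Python sketch below assumes both are already collected. The page paths and the `find_orphan_pages` name are hypothetical.

```python
def find_orphan_pages(all_pages, internal_links, entry_points=("/",)):
    """Return pages that no other page on the site links to.

    all_pages:      every known URL path on the site
    internal_links: {source page: [pages it links to]}
    entry_points:   pages users reach directly, such as the homepage
    """
    linked = set()
    for source, targets in internal_links.items():
        for target in targets:
            if target != source:  # a page linking to itself doesn't count
                linked.add(target)
    return sorted(
        page for page in all_pages
        if page not in linked and page not in entry_points
    )


# Made-up site structure for illustration.
all_pages = [
    "/",
    "/blog/",
    "/blog/lawn-fertilization",
    "/blog/old-promo",
    "/products/fertilizer",
]
internal_links = {
    "/": ["/blog/", "/products/fertilizer"],
    "/blog/": ["/blog/lawn-fertilization"],
    "/blog/lawn-fertilization": ["/products/fertilizer"],
}

print(find_orphan_pages(all_pages, internal_links))  # prints ['/blog/old-promo']
```

Each orphan that turns up is a decision point: link it from a page where it makes sense for the reader, consolidate it with similar content, or remove it.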

What Should You Do After an Algorithm Update?

The best thing to do after an update is to wait. Some businesses make the mistake of freaking out and then demanding a website rebuild or other extensive changes. The good news is that many websites recover quickly after an algorithm update. The bad news is that if you were penalized for hosting unhelpful content, it could take three months, and possibly more time, to recover because you need to prove to Google that you’re committed to publishing only helpful content.

Some general best practices you can follow after an update (besides waiting) include:

- Perform a content audit. Analyze the pages that were hit the most regarding traffic and ranking drops. Can you find any patterns?

- Remove thin content that isn’t serving the user or is irrelevant or outdated. Remember to update hyperlinks or set up redirects when possible. Setting up a redirect isn’t necessary if the content was never indexed and/or has no internal linking.

- Ensure future content, if generated by AI, is created in collaboration with an expert or edited by someone with experience. Remember—AI tools are language-based models. The output is only as good as the input. If accuracy is a concern, ensure the information you provide comes from an industry expert. All AI-generated content should also be edited for accuracy and general user experience.

- Make a better effort to improve content quality and trustworthiness. Don’t get all of your content ideas from ChatGPT. Look at your audience—what do your customers (or potential customers) frequently ask you? Can they find answers to those questions on your website? More importantly, you need to have an actual content plan. Don’t post content just to have content on your website.

- Evaluate link offers. You may get cold emails asking for link exchanges. Evaluate those and don’t immediately agree to the exchange. What content do they want to be linked? Is it relevant to what you do? Would the content add value to your website users? If not, say no thanks and move on.

- Strategize guest posting. Guest posting is an excellent way to earn free (potentially high-quality) backlinks. However, if you’ve already published this content on your website, don’t try to get it published elsewhere. You’re just creating duplicate content. Likewise, if someone wants to write a guest post on your website, evaluate them. Who are they, and do they have topical authority? Does the content piece they’re proposing already exist on your website to some degree?

- Fix technical errors, including 404s, as they come up. As I mentioned earlier, this is especially crucial if they’re important resources for the website user.

- Continue disavowing spammy backlinks. Disavowing can be done in Google Search Console—it’s basically your way of saying, “I do not want this backlink. I did not ask for this backlink.” I’ll say it again—more backlinks=not better. Think of it as a property value issue. Maybe you’ve spent all this time keeping your home’s exterior fresh, but then someone moves in next door and lets their front yard turn into a gathering spot for all the neighborhood’s raccoons. That impacts your property value. The same happens with backlinks. If you have many questionable websites sending signals to your website, Google may eventually think that your site is questionable, too.

- Keep your Google Business Profile updated, especially if you’re a local service business. I recommend checking it at least once a week for new reviews (make sure you respond to them!), adding updates every now and then (especially when you have promotions and other special offers), and checking your services list monthly to make sure Google didn’t add anything questionable (yes, it happens; Google keeps adding Trade Show Marketing as a service Momentum offers, and I keep removing it).

- Have an internal linking strategy for your content. You shouldn’t have any orphan pages on your website. When creating a new content piece, think about where you can link it on the website. It needs to make sense; you can’t just slap a link on a page and call it a day. For example, if you have a blog post about lawn fertilization, you may want to link your lawn fertilizer product category page on it.

- Focus on brand awareness/brand advocacy. I’ve seen a lot of businesses dismiss direct searches, but they really do have an impact on search/online visibility. If people search for your business name frequently, it sends a signal to Google. Think about places like Home Depot, Amazon, Ace Hardware, and Lowe’s. They almost always dominate the DIY space because so many people search for their websites specifically to find products. It doesn’t mean you need to spend thousands of dollars on billboards or commercials; just don’t underestimate the power of a good PR or brand campaign.
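
For the first item in the list above, finding the pages that were hit the most, a simple before-and-after comparison goes a long way. The Python sketch below assumes you have exported clicks per page for equal-length periods before and after the update (for example, from Google Search Console) and loaded them into dictionaries. The page paths, click counts, and the 50-click minimum are all invented for illustration.

```python
def biggest_drops(before, after, min_clicks=50, top_n=5):
    """Return (page, percent change) pairs for the steepest click drops.

    before / after: {page: clicks} for equal-length periods.
    Pages with fewer than `min_clicks` beforehand are skipped, because
    small numbers swing wildly and say little about the update.
    """
    drops = []
    for page, clicks_before in before.items():
        if clicks_before < min_clicks:
            continue
        clicks_after = after.get(page, 0)
        change = (clicks_after - clicks_before) / clicks_before
        if change < 0:
            drops.append((page, round(change * 100, 1)))
    drops.sort(key=lambda item: item[1])  # most negative first
    return drops[:top_n]


# Made-up click data for illustration.
before = {"/blog/a": 400, "/blog/b": 120, "/products/x": 900, "/about": 10}
after = {"/blog/a": 100, "/blog/b": 130, "/products/x": 810, "/about": 2}

for page, pct in biggest_drops(before, after):
    print(f"{page}: {pct}%")
```

Once you have the list, look for what the hardest-hit pages have in common, such as the same template, topic, author, or age. That pattern is usually more useful than any single page’s number.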

Get More Tips From Momentum

Hopefully, this blog answered all the questions you had about algorithm updates. If you’re looking for more SEO tips and advice, please visit our blog. You can also schedule a consultation with our experts if you’ve noticed significant ranking or traffic drops and can’t pinpoint why it’s happening.